Contact us : contact@digitechgenai.com

Introduction

LLMs have revolutionized the AI world. Industry leaders like OpenAI’s ChatGPT and Google’s LaMDA are pioneering chatbot development. Developers can now create intelligent conversational systems with these available technologies.

Spring AI stands out in the Spring Framework ecosystem because it offers one consistent API for working with many different LLM providers. Combined with Ollama, an open-source tool for running models such as Meta’s Llama 3 locally, it creates a solid foundation for sophisticated chatbots. Together, these frameworks make it easier to add features like conversational history and context-aware responses.

This piece shows you how to build a practical help desk chatbot that troubleshoots user issues. You’ll discover ways to utilize these powerful tools and implement key features like prompt engineering and conversational memory to make your chatbot work better.

Understanding Chatbot Technology in 2025

Chatbots have changed a lot in recent years. By 2025, smart chatbots have become essential business tools across industries, and projections suggest they will become the primary customer service channel for approximately 25% of organizations by 2027.

What are smart chatbots?

Smart chatbots in 2025 are sophisticated AI-driven conversation systems that go beyond their rule-based predecessors. Traditional chatbots used pre-programmed scripts and keyword matching. Today’s intelligent chatbots learn from each interaction and keep getting better at their responses.

Smart chatbots are programs that copy human conversations through text or voice. Their intelligence comes from knowing how to understand context, process nuance, and respond naturally. Modern chatbot platforms can now detect emotions through sentiment analysis and adjust their tone to match the situation.

Companies using smart chatbots see great results. For example, some organizations have reached an 88% self-resolution rate for customer questions. This shows how well these systems handle real-life problems without human help.

How LLMs power modern chatbots

Modern chatbots’ amazing capabilities come straight from Large Language Models (LLMs). These advanced AI systems train on massive text datasets—often processing trillions of tokens. This lets them understand and create human-like text with impressive accuracy.

LLMs split language into smaller pieces called tokens (about three-quarters of a word in English). This process helps them analyze word connections and patterns to create a complete map of language relationships. The foundation helps chatbots to:

- Understand natural language including slang, ambiguous phrases, and incomplete sentences

- Keep track of conversation context across multiple interactions

- Create dynamic, relevant responses

- Learn from past conversations

- Handle complex scenarios that confuse rule-based systems
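As a quick worked example of that ratio: if one token is about three-quarters of an English word, a text of N words costs roughly N ÷ 0.75 tokens, and a 4,096-token context window holds roughly 4,096 × 0.75 = 3,072 words. The sketch below encodes that rule of thumb. TokenEstimate is a hypothetical helper, and real tokenizers (including Llama 3’s) will produce different exact counts.

```java
/** Rough token budgeting based on the ~0.75 words-per-token rule of thumb for English text. */
final class TokenEstimate {
    private static final double WORDS_PER_TOKEN = 0.75;

    private TokenEstimate() {}

    /** Counts whitespace-separated words; a crude stand-in for a real tokenizer. */
    static int wordCount(String text) {
        String trimmed = text.strip();
        return trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
    }

    /** Estimated tokens = words / 0.75, rounded up so budgets err on the safe side. */
    static int estimateTokens(String text) {
        return (int) Math.ceil(wordCount(text) / WORDS_PER_TOKEN);
    }

    /** Estimated words that fit in a token budget = tokens * 0.75, rounded down. */
    static int wordsForTokens(int tokens) {
        return (int) Math.floor(tokens * WORDS_PER_TOKEN);
    }
}
```

For instance, the six-word question "How do I reset my password?" is estimated at 8 tokens, and wordsForTokens(4096) gives 3,072 words.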

LLM-powered chatbots stand far ahead of traditional ones. Old chatbots relied on fixed scripts with strict response patterns that limited complex question handling. LLM solutions offer flexible, smart responses that adapt to users’ needs. Users enjoy more engaging experiences because the chatbot’s responses feel natural and conversational.

Modern chatbot development tools have made big steps forward in natural language understanding (NLU). Systems now catch subtle hints like sarcasm, disappointment, or excitement. This emotional awareness helps chatbots tailor their responses appropriately and create genuine human-like interactions.

Benefits of using Ollama and Spring AI

Developers building advanced chatbots in 2025 can build on the foundation that Ollama and Spring AI provide. Ollama is a user-friendly platform for running Large Language Models locally. Its streamlined API and flexible tools make it well suited to building conversational AI efficiently.

Spring AI has become the newest addition to the Spring Framework family. Java developers can now work easily with various LLMs through chat prompts. This creates a smooth development experience that uses Spring ecosystem’s strength and scalability.

Ollama’s tool support for LLMs marks a notable advance. Models can now decide when to call external functions and use returned data. Chatbots can access live information or do complex calculations on demand, which greatly expands what they can do.

Spring AI takes this idea further by connecting it with the Spring ecosystem. Java developers now have a powerful way to build AI-enhanced applications. This opens new possibilities to create responsive AI systems that work with real-life data and services.

These tools give businesses the perfect mix of power and ease of use to build chatbots. Anyone can use Ollama’s open-source platform to run AI models locally. Spring Boot’s solid support makes it ideal for enterprise-level chatbot solutions.

Building chatbot architecture has become quicker. Developers can now focus on creating smart features instead of dealing with complex infrastructure.

Setting Up Your Development Environment

A solid foundation is the key to building a great chatbot. Before you start coding, you need a development environment that supports Ollama and Spring AI. Getting this first step right makes it much easier to implement complex features later.

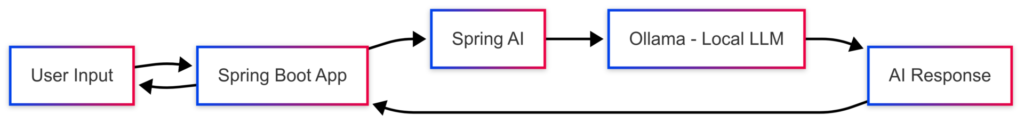

By the end of this tutorial, you’ll have a chatbot architecture like the one shown in the diagram above, integrating Ollama with Spring Boot.

Required software and tools

Several core components are needed to build a chatbot with Ollama and Spring AI. Your machine needs at least 8GB of RAM to work well, and larger LLMs need extra resources when you run them locally.

Here’s what you need in your software toolkit:

- Java Development Kit (JDK) – Needed for Spring Boot applications (Spring Boot 3 requires Java 17 or later)

- Python – Optional; useful for AI experiments and testing scripts

- Git – Helps manage and control code versions

- IDE – IntelliJ IDEA or Visual Studio Code work well for Java development (PyCharm if you also work in Python)

You’ll also need package managers like pip (Python) or Maven/Gradle (Java) to handle dependencies. A Python virtual environment helps keep project dependencies separate and prevents conflicts.

Version control plays a vital role during implementation. Git lets you track changes and work together with others while keeping a complete history of your chatbot development. GitHub or Bitbucket repositories add more control and documentation options.

Installing Ollama on different platforms

Each operating system has its own Ollama installation process. On macOS, download the app from the official website, unzip the file, and move Ollama.app to the Applications folder.

Windows users have it easy – download the executable from Ollama’s website and run it. The setup finishes on its own.

Linux users can install Ollama with this command:

```shell
curl -fsSL https://ollama.com/install.sh | sh
```

Start Ollama by typing ollama serve in the terminal. You can check that it’s running with ollama -v or by visiting http://localhost:11434/ in your browser. Ollama uses port 11434 by default.
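You can run the same check from Java, for example before your application starts sending prompts. The sketch below uses only the JDK’s java.net.http client and assumes the default address; it relies on Ollama’s root endpoint answering HTTP 200 ("Ollama is running") while the server is up. OllamaHealthCheck is a hypothetical name, not part of Ollama or Spring AI.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Sketch of a reachability check for a local Ollama server using only the JDK. */
final class OllamaHealthCheck {
    private OllamaHealthCheck() {}

    /**
     * Sends GET to the server root; a running Ollama server replies 200 with "Ollama is running".
     * Connection errors, timeouts and non-200 statuses all count as "not running".
     */
    static boolean isRunning(URI baseUri) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(baseUri.resolve("/"))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200;
        } catch (IOException e) {
            return false;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return false;
        }
    }
}
```

For instance, OllamaHealthCheck.isRunning(URI.create("http://127.0.0.1:11434")) should return true while ollama serve is running and false otherwise.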

On Linux, the install script normally sets up a systemd service for you. If you installed Ollama manually, you can create the service yourself so that Ollama starts automatically and comes back after system reboots:

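```shell
sudo nano /etc/systemd/system/ollama.service
```

Add the service configuration, then reload systemd, enable the service at boot, and start it now:

```shell
sudo systemctl daemon-reload
sudo systemctl enable ollama
sudo systemctl start ollama
```

Configuring Spring Boot for AI development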

Spring AI makes chatbot architecture design easier through its integration with various LLM platforms, including Ollama. Let’s set up a new Spring Boot project with Spring AI support.

Start by creating a project on Spring Initializr (start.spring.io) with these settings:

- Project: Maven

- Language: Java

- Spring Boot: 3.3.4 (or latest version)

- Dependencies: Spring Web, Ollama (listed in the AI section; it adds Spring AI’s Ollama support)

Now set up the connection to your Ollama instance. Add these lines to your application.properties file:

```properties
spring.ai.ollama.base-url=http://127.0.0.1:11434
spring.ai.ollama.chat.options.model=llama3
spring.ai.ollama.chat.options.temperature=0.7
```

The base-url points to your local Ollama service. The model setting picks your LLM, while temperature controls response randomness: higher values make responses more creative, lower values make them more predictable.

Spring Boot’s autoconfiguration gives you a ChatClient.Builder instance. This becomes your starting point to create a prototype that talks to your chosen LLM.

These configurations are flexible. You can also set default system text once, for example with defaultSystem(...) on the ChatClient.Builder in a configuration class, so you don’t repeat prompt instructions throughout your code.
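To see what these settings amount to on the wire, it helps to look at the JSON body of a request to Ollama’s /api/chat endpoint, which takes a model name, a list of role/content messages, a stream flag, and an options object that carries temperature. The sketch below assembles such a body by hand with a default system message placed first. It only illustrates Ollama’s REST format under those assumptions; it is not how Spring AI builds its requests internally, and OllamaChatBody is a hypothetical helper.

```java
/** Hypothetical helper: shows how model, temperature and a default system prompt map onto an Ollama /api/chat body. */
final class OllamaChatBody {
    private OllamaChatBody() {}

    /** JSON string literal with quotes, backslashes and control characters escaped. */
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c)); // control characters as unicode escapes
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    static String message(String role, String content) {
        return "{\"role\":" + quote(role) + ",\"content\":" + quote(content) + "}";
    }

    /** Non-streaming /api/chat body: the default system message goes first, then the user's message. */
    static String build(String model, double temperature, String systemText, String userText) {
        return "{\"model\":" + quote(model)
                + ",\"messages\":[" + message("system", systemText) + "," + message("user", userText) + "]"
                + ",\"stream\":false"
                + ",\"options\":{\"temperature\":" + temperature + "}}";
    }
}
```

Keeping the system text in a single place means every conversation starts from the same instructions, which is the point of configuring it as a default.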

Getting Started with Ollama

The development environment is ready. Let’s start working with Ollama. This powerful tool makes chatbot development easier by letting you run sophisticated LLMs on your machine. We’ll learn how to run our first model and see what Ollama can do to create intelligent conversational systems.

Running your first LLM model

Getting your first model up and running is simple after installing Ollama. Open your terminal and type:

```shell
ollama run llama3
```

This command does two things: it downloads the Llama 3 model if you don’t have it yet, and it starts an interactive chat session. You can replace "llama3" with any other supported model from Ollama’s library; popular choices include Mistral, Gemma 2, and LLaVA.

The model loads and shows a prompt to start chatting right away:

```text
>>> Send a message (/? for help)
```

Every chatbot project really does start with this simple step. You can test how different models handle various prompts. To end the chat session, type /bye and press Enter.

Testing different models helps you find the right fit for your use case. A smaller 3B-parameter model might be enough for basic tasks, while larger 7B or 13B models work better for complex enterprise chatbots.

Understanding Ollama’s capabilities

Ollama comes with several features that support chatbot development. The essential commands to manage models include:

- ollama list – Displays all downloaded models

- ollama rm modelname – Removes a specific model

- ollama pull modelname – Updates or downloads a model

Custom model creation through Modelfiles is one of Ollama’s standout features. A Modelfile works like a Dockerfile, but for LLMs. You can customize model behavior through system prompts and parameter adjustments without retraining the model.

Ollama also provides a RESTful API at http://localhost:11434 for direct app integration. The API opens up many possibilities for chatbot app development: developers can add LLM features without complex setups or cloud services.
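A minimal sketch of calling that API directly with the JDK’s HttpClient, assuming the default address and the non-streaming mode of the /api/generate endpoint (with "stream": false, Ollama returns one JSON object whose response field holds the generated text). OllamaGenerate is a hypothetical class, and parsing the JSON reply is left out to keep the example dependency-free.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Sketch: calling Ollama's REST API directly with the JDK HttpClient, no Spring involved. */
final class OllamaGenerate {
    private OllamaGenerate() {}

    /** JSON string literal with quotes, backslashes and control characters escaped. */
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    /** Non-streaming body, so Ollama answers with a single JSON object instead of a stream of chunks. */
    static String body(String model, String prompt) {
        return "{\"model\":" + quote(model) + ",\"prompt\":" + quote(prompt) + ",\"stream\":false}";
    }

    /** Builds POST <baseUri>/api/generate with a JSON body. */
    static HttpRequest buildRequest(URI baseUri, String model, String prompt) {
        return HttpRequest.newBuilder(baseUri.resolve("/api/generate"))
                .header("Content-Type", "application/json")
                .timeout(Duration.ofMinutes(2)) // the first call may include model load time
                .POST(HttpRequest.BodyPublishers.ofString(body(model, prompt)))
                .build();
    }

    /** Sends the request and returns the raw JSON; the generated text is in its "response" field. */
    static String send(HttpRequest request) throws IOException, InterruptedException {
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("Ollama returned HTTP " + response.statusCode() + ": " + response.body());
        }
        return response.body();
    }
}
```

Calling OllamaGenerate.send(OllamaGenerate.buildRequest(URI.create("http://127.0.0.1:11434"), "llama3", "How do I clear my browser cache?")) returns the raw JSON reply; in a real project a JSON library such as Jackson would extract the response field.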

Multi-modal support in Ollama matters for teams building chatbot development tools. Models like LLaVA can work with both images and text. This creates versatile chatbot apps that can “see” and respond to visual content.

Common troubleshooting tips

Ollama is user-friendly, but you might run into some issues. Most problems have simple solutions.

Network configurations can cause problems when using Ollama with Docker. Ollama usually listens on localhost, which can make Docker containers fail to connect. Here’s how to fix it:

- For Ollama in Docker: Bind to 0.0.0.0 inside the container and publish port 11434

- For applications in Docker connecting to Ollama: Use host.docker.internal (Windows/MacOS) or 172.17.0.1 (Linux) instead of localhost

IPv6 setups can also cause issues. Connection attempts might fail if your computer uses IPv6 but Ollama listens on an IPv4 address. The fix is to use the specific IPv4 address 127.0.0.1 instead of localhost in your configurations.
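The two fixes above (an explicit host address when Docker is involved, and 127.0.0.1 instead of localhost) can be folded into one small helper that decides which base URL to hand to your client. This is a sketch under assumptions: the override value would come from your own configuration (in Spring Boot, spring.ai.ollama.base-url can usually be overridden through the SPRING_AI_OLLAMA_BASEURL environment variable thanks to relaxed binding), and OllamaBaseUrl is a hypothetical name.

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Hypothetical helper that chooses the Ollama base URL, applying the Docker and IPv6 fixes above. */
final class OllamaBaseUrl {
    static final String DEFAULT = "http://127.0.0.1:11434"; // default port on the IPv4 loopback

    private OllamaBaseUrl() {}

    /**
     * Uses the override when present (e.g. http://host.docker.internal:11434 from inside a container),
     * otherwise the default. A "localhost" host is rewritten to 127.0.0.1 so the client always targets
     * the IPv4 loopback address that Ollama listens on by default.
     */
    static URI resolve(String override) {
        String raw = (override == null || override.isBlank()) ? DEFAULT : override.strip();
        URI uri = URI.create(raw);
        if (!"localhost".equalsIgnoreCase(uri.getHost())) {
            return uri;
        }
        try {
            return new URI(uri.getScheme(), uri.getUserInfo(), "127.0.0.1", uri.getPort(),
                    uri.getPath(), uri.getQuery(), uri.getFragment());
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Invalid Ollama base URL: " + raw, e);
        }
    }
}
```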

Resource allocation matters too. Models need specific amounts of RAM: 7B models need 8GB, 13B models need 16GB, and 33B models need 32GB or more.
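These guidelines line up with a simple calculation: a model’s weights take roughly parameters × bits per weight ÷ 8 bytes. Ollama’s default model tags are typically 4-bit quantized, so a 7B model needs about 7 × 4 ÷ 8 = 3.5 GB for its weights alone, leaving the rest of an 8GB machine for the context cache, the runtime, and the operating system. The hypothetical helper below encodes both the guideline and the estimate. It ignores quantization metadata and context length, so treat it as a rough sizing aid only.

```java
/** Rule-of-thumb sizing for local models; a sketch, since real usage also depends on context length and runtime overhead. */
final class ModelMemoryGuide {
    private ModelMemoryGuide() {}

    /** Minimum RAM in GB per the guideline: up to 7B -> 8, up to 13B -> 16, up to 33B -> 32. */
    static int recommendedRamGb(double billionsOfParameters) {
        if (billionsOfParameters <= 7) return 8;
        if (billionsOfParameters <= 13) return 16;
        if (billionsOfParameters <= 33) return 32;
        throw new IllegalArgumentException("Beyond 33B the guideline only says 32 GB or more");
    }

    /** Approximate size of the weights alone in GB: billions of parameters * bits per weight / 8 bits per byte. */
    static double weightGigabytes(double billionsOfParameters, int bitsPerWeight) {
        return billionsOfParameters * bitsPerWeight / 8.0;
    }
}
```

The same arithmetic shows why unquantized 16-bit weights are rarely practical on a laptop: a 13B model would need about 26 GB for its weights alone.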

A final tip: when Ollama won’t accept connections, make sure the server is running (start it with ollama serve if needed). Many people run ollama run model-name on its own and forget that the API on port 11434 is only available while the server process is up.

Building Your First Chatbot with Spring AI

With Spring AI and Ollama configured, it’s time to explore the practical side of chatbot development. The Spring AI module offers a simplified way to create intelligent conversational interfaces that understand and respond to user queries.

Creating the project structure

A well-organized project structure is the foundation of an effective chatbot architecture. The standard Spring Boot application structure works best with some AI-specific additions:

- controller package – Contains REST endpoints that expose chatbot functionality

- model package – Houses request/response classes for chat interactions

- service package – Implements chatbot business logic and LLM interactions

- config package – Manages Spring Bean configurations for AI components

This modular approach matches Spring’s philosophy and separates concerns cleanly. It keeps code reusable and makes maintenance easier as your chatbot project grows.

Setting up dependencies

Spring AI requires specific dependencies in the project. Here’s what you need in your Maven pom.xml:

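```xml
<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>1.0.0-M1</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

<!-- Inside your existing <dependencies> section; the version comes from the BOM -->
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
</dependency>
```

Milestone releases such as 1.0.0-M1 are published to the Spring Milestones repository (https://repo.spring.io/milestone) rather than Maven Central, so add that repository to your pom.xml as well.

If you prefer application.yml over application.properties, the connection to the Ollama server is configured like this:

```yaml
spring:
  ai:
    ollama:
      base-url: http://localhost:11434
      chat:
        options:
          model: llama3
```

Implementing basic chat functionality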

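With this foundation in place, you can create a basic controller that handles chat interactions:

```java
import org.springframework.http.HttpStatus;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/helpdesk")
public class HelpDeskController {

    private final HelpDeskChatbotService helpDeskChatbotService;

    // Constructor injection: Spring supplies the service bean
    public HelpDeskController(HelpDeskChatbotService helpDeskChatbotService) {
        this.helpDeskChatbotService = helpDeskChatbotService;
    }

    // POST /helpdesk/chat with {"prompt_message": "...", "history_id": "..."}
    @PostMapping("/chat")
    public ResponseEntity<HelpDeskResponse> chat(@RequestBody HelpDeskRequest helpDeskRequest) {
        String chatResponse = helpDeskChatbotService.call(
                helpDeskRequest.getPromptMessage(),
                helpDeskRequest.getHistoryId());
        return new ResponseEntity<>(new HelpDeskResponse(chatResponse), HttpStatus.OK);
    }
}
```

The controller needs request and response models: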

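```java
// HelpDeskRequest.java
import com.fasterxml.jackson.annotation.JsonProperty;

public class HelpDeskRequest {

    @JsonProperty("prompt_message")
    private String promptMessage;

    @JsonProperty("history_id")
    private String historyId;

    // Jackson needs a no-arg constructor to deserialize the request body
    public HelpDeskRequest() {
    }

    public String getPromptMessage() {
        return promptMessage;
    }

    public String getHistoryId() {
        return historyId;
    }
}

// HelpDeskResponse.java
public class HelpDeskResponse {

    private final String result;

    public HelpDeskResponse(String result) {
        this.result = result;
    }

    // Jackson serializes this getter as {"result": "..."}
    public String getResult() {
        return result;
    }
}
```

The service layer handles the core functionality. This is where you implement conversational memory to create context-aware responses: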

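```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import org.springframework.ai.chat.messages.AssistantMessage;
import org.springframework.ai.chat.messages.Message;
import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.ai.chat.model.ChatModel;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.stereotype.Service;

@Service
public class HelpDeskChatbotService {
    // In Spring AI 1.0.0-M1 the Ollama starter auto-configures an OllamaChatModel (older releases: OllamaChatClient)
    private final ChatModel chatModel;
    // history_id -> earlier messages; ConcurrentHashMap because requests arrive concurrently
    private final Map<String, List<Message>> conversationalHistoryStorage = new ConcurrentHashMap<>();

    public HelpDeskChatbotService(ChatModel chatModel) {
        this.chatModel = chatModel;
    }

    public String call(String userMessage, String historyId) {
        List<Message> history = conversationalHistoryStorage.computeIfAbsent(historyId, id -> new ArrayList<>());
        synchronized (history) { // one turn at a time per conversation
            List<Message> messages = new ArrayList<>(history); // earlier turns give the model context
            messages.add(new UserMessage(userMessage));
            String answer = chatModel.call(new Prompt(messages))
                    .getResult().getOutput().getContent(); // getText() in Spring AI 1.0 GA
            history.add(new UserMessage(userMessage));
            history.add(new AssistantMessage(answer));
            return answer;
        }
    }
}
```

This structure shows how Spring AI makes advanced features such as conversational memory straightforward to implement.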

Testing your chatbot

A chatbot needs thorough testing before it reaches users. Spring AI ships evaluators, such as RelevancyEvaluator, that ask an LLM to judge whether an answer is relevant to the question and the context it was based on:

```java
// Assumes chatClient was built with a QuestionAnswerAdvisor (retrieval-augmented generation),
// which stores the retrieved documents in the response metadata, and that
// relevancyEvaluator = new RelevancyEvaluator(ChatClient.builder(chatModel)).
@Test
void testRelevance() {
    String question = "How can I reset my password?";

    ChatResponse chatResponse = chatClient.prompt()
            .user(question)
            .call()
            .chatResponse();

    // getContent() in the 1.0.0 milestones; getText() in Spring AI 1.0 GA
    String answer = chatResponse.getResult().getOutput().getContent();

    // Documents the answer should be grounded in
    List<Document> documents = chatResponse.getMetadata()
            .get(QuestionAnswerAdvisor.RETRIEVED_DOCUMENTS);

    EvaluationRequest evaluationRequest = new EvaluationRequest(question, documents, answer);
    EvaluationResponse evaluationResponse = relevancyEvaluator.evaluate(evaluationRequest);

    assertThat(evaluationResponse.isPass()).isTrue();
}
```

Manual testing through a web interface or command-line tools helps you understand the user experience better. This step is especially important for website chatbots, because it reveals both technical behavior and user interaction patterns.
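For command-line style manual tests you can also script requests against the /helpdesk/chat endpoint. The sketch below assumes the application runs on Spring Boot’s default port 8080 and uses the snake_case field names declared with @JsonProperty in HelpDeskRequest; HelpDeskClient is a hypothetical test helper, not part of the application.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Hypothetical manual-test client for the /helpdesk/chat endpoint. */
final class HelpDeskClient {
    private HelpDeskClient() {}

    /** JSON string literal with quotes, backslashes and control characters escaped. */
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    /** Request body using the snake_case names that HelpDeskRequest expects. */
    static String body(String promptMessage, String historyId) {
        return "{\"prompt_message\":" + quote(promptMessage) + ",\"history_id\":" + quote(historyId) + "}";
    }

    static HttpRequest request(URI appBaseUri, String promptMessage, String historyId) {
        return HttpRequest.newBuilder(appBaseUri.resolve("/helpdesk/chat"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body(promptMessage, historyId)))
                .build();
    }

    /** Sends one turn and returns the raw JSON reply (the serialized HelpDeskResponse). */
    static String send(HttpClient client, HttpRequest request) throws IOException, InterruptedException {
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }
}
```

Sending "My VPN won’t connect" and then "I already restarted it" with the same history_id (for example user-42) is a quick way to check that the second answer builds on the first.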

Spring AI helps developers create sophisticated conversational systems faster without complex implementation details getting in the way.

Adding Intelligence to Your Chatbot

The next significant step in chatbot development comes after setting up simple chatbot functions. Adding intelligence makes interactions natural and valuable. This enhancement turns question-answer systems into sophisticated conversational agents that users perceive as remarkably human-like.

Implementing conversational memory

Simple chatbots often fail because they can’t remember previous interactions. Without memory, each user message is processed independently, which leads to disconnected conversations. Several memory strategies address this challenge.

Buffer memory offers the simplest solution by storing the conversation history directly in the prompt. This straightforward approach gets expensive in longer conversations: every turn adds tokens, and the history can eventually exceed the model’s context window.

Summary memory condenses previous exchanges into a single narrative, which saves tokens. Entity memory takes a different approach by extracting specific details, such as customer names or priorities, throughout the conversation.

The ConversationSummaryBufferMemory class in frameworks like LangChain combines both approaches. It summarizes older interactions while keeping recent exchanges unchanged, a balance that suits most help desk conversations well.
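The summary-buffer idea is easy to see in code. The sketch below is plain Java, not LangChain’s or Spring AI’s implementation: it keeps the last few turns verbatim and folds older ones into a running summary through a pluggable summarizer. In a real chatbot the summarizer would be another LLM call (for example, "Summarize this conversation so far"), and the limit would usually be measured in tokens rather than turns; both are simplifying assumptions here, and SummaryBufferMemory is a hypothetical name.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.BinaryOperator;

/** Plain-Java sketch of summary-buffer memory: recent turns verbatim, older turns folded into a summary. */
final class SummaryBufferMemory {
    private final int maxRecentTurns;
    // (previousSummary, evictedTurn) -> newSummary; in a real bot this would call the LLM
    private final BinaryOperator<String> summarizer;
    private final Deque<String> recent = new ArrayDeque<>();
    private String summary = "";

    SummaryBufferMemory(int maxRecentTurns, BinaryOperator<String> summarizer) {
        if (maxRecentTurns < 1) throw new IllegalArgumentException("maxRecentTurns must be >= 1");
        this.maxRecentTurns = maxRecentTurns;
        this.summarizer = summarizer;
    }

    /** Records a turn; when the buffer is full, the oldest turn is folded into the summary. */
    void add(String turn) {
        recent.addLast(turn);
        while (recent.size() > maxRecentTurns) {
            summary = summarizer.apply(summary, recent.removeFirst());
        }
    }

    String summary() {
        return summary;
    }

    List<String> recentTurns() {
        return new ArrayList<>(recent);
    }

    /** Context block to prepend to the next prompt: summary first, then the verbatim recent turns. */
    String toPromptContext() {
        StringBuilder sb = new StringBuilder();
        if (!summary.isEmpty()) {
            sb.append("Summary of earlier conversation: ").append(summary).append('\n');
        }
        recent.forEach(turn -> sb.append(turn).append('\n'));
        return sb.toString();
    }
}
```

With a limit of two turns, adding A, B, C and D leaves C and D verbatim and folds A and B into the summary.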

Creating context-aware responses

Chatbot interactions feel natural when the system can reference previous exchanges. Making this happen takes these elements:

- A unique conversation ID under which each prompt and response is stored

- Each new prompt includes the conversation history for that ID

- Specialized classes, such as LangChain’s RunnableWithMessageHistory or Spring AI’s MessageChatMemoryAdvisor, handle history retrieval automatically
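A minimal sketch of the first two elements, assuming a single application instance (a production system would persist history in a database or cache). ConversationStore and its Message record are hypothetical names; the store keeps one message list per conversation ID and replays it in front of each new user message.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical in-memory store: one message list per conversation ID, so users can resume where they left off. */
final class ConversationStore {
    /** A single chat message; role is "user" or "assistant". */
    record Message(String role, String content) {}

    private final Map<String, List<Message>> byConversationId = new ConcurrentHashMap<>();

    /** Appends a message to the conversation, creating the conversation on first use. */
    void append(String conversationId, Message message) {
        byConversationId
                .computeIfAbsent(conversationId, id -> Collections.synchronizedList(new ArrayList<>()))
                .add(message);
    }

    /** Returns an immutable snapshot of the history for the ID (empty if unknown). */
    List<Message> history(String conversationId) {
        List<Message> messages = byConversationId.get(conversationId);
        if (messages == null) {
            return List.of();
        }
        synchronized (messages) { // synchronizedList requires manual locking while copying
            return List.copyOf(messages);
        }
    }

    /** Builds the prompt text: the full history for the ID first, then the new user message. */
    String buildPrompt(String conversationId, String newUserMessage) {
        StringBuilder sb = new StringBuilder();
        for (Message m : history(conversationId)) {
            sb.append(m.role()).append(": ").append(m.content()).append('\n');
        }
        return sb.append("user: ").append(newUserMessage).toString();
    }
}
```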

Research shows that context-aware chatbots deliver more consistent and tailored experiences. Users can resume conversations where they left off, a vital feature for website chatbots.

Handling different user intents

Intent classification is central to intelligent chatbot design. Through this process, the system learns to understand and categorize user messages by their core purpose.

Modern chatbot frameworks identify user goals through natural language processing (NLP) and machine learning algorithms. Studies report that proper intent classification helps businesses reduce customer support handling times by 77%.

An effective help desk chatbot should recognize these intent types:

- Navigational intents guide users through platforms

- Informational intents answer questions about products/services

- Transactional intents support purchases or bookings

- Support intents troubleshoot technical issues
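A simple way to start is a keyword baseline that maps messages to these four intent types. The sketch below is exactly that: a rule-based illustration with hypothetical keyword lists, not the NLP or machine-learning classification described above. In practice you would typically let the LLM classify the message, or train a classifier, and keep rules like these as a fallback.

```java
import java.util.List;
import java.util.Locale;

/** Keyword baseline for the four intent types; real systems would usually use an LLM or a trained classifier. */
final class IntentClassifier {
    /** Declaration order is the priority order: the first intent with a matching keyword wins. */
    enum Intent {
        SUPPORT("error", "not working", "can't", "cannot", "reset", "crash", "broken"),
        TRANSACTIONAL("buy", "order", "book", "purchase", "subscribe"),
        NAVIGATIONAL("where is", "where can i find", "go to", "take me to"),
        INFORMATIONAL("what is", "how much", "price", "explain", "tell me about"),
        UNKNOWN;

        private final List<String> keywords; // hypothetical keyword lists, for illustration only

        Intent(String... keywords) {
            this.keywords = List.of(keywords);
        }
    }

    private IntentClassifier() {}

    static Intent classify(String message) {
        String text = message.toLowerCase(Locale.ROOT);
        for (Intent intent : Intent.values()) {
            for (String keyword : intent.keywords) {
                if (text.contains(keyword)) {
                    return intent;
                }
            }
        }
        return Intent.UNKNOWN; // no rule matched: hand the message to the LLM instead
    }
}
```

Checking support keywords first is a deliberate choice for a help desk: a message that mentions both a purchase and an error is more urgent as a support case.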

Proper intent recognition makes a substantial difference. Industry statistics show that 88% of clients interacted with chatbots in 2022, and 70% rated their experience positively. Mastering these intelligent features remains essential to creating truly effective conversational agents.

Enhancing Your Chatbot with Advanced Features

Your chatbot can go from good to great by adding advanced features that create natural and versatile interactions. Modern chatbot development now goes beyond simple question-answering to create truly intelligent conversations.

Multi-turn conversations

Traditional chatbots see each interaction as separate, which creates disconnected experiences. Multi-turn conversational systems remember previous exchanges and keep track of context throughout the dialog. Users can refer to earlier statements without having to repeat themselves.

Building multi-turn conversations requires proper context retention. A recent study shows that context-aware chatbots deliver more consistent experiences, which lets users continue conversations naturally. This approach proves especially useful for enterprise AI chatbot development, where customer questions often need multiple exchanges.

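To implement this functionality with Spring AI:

```java
import org.springframework.ai.chat.memory.ChatMemory;
import org.springframework.ai.chat.memory.InMemoryChatMemory;

// Spring AI's ChatMemory interface maintains conversation context across interactions.
// InMemoryChatMemory is the in-memory implementation in the 1.0.0 milestone releases
// (Spring AI 1.0 GA replaces it with MessageWindowChatMemory).
ChatMemory chatMemory = new InMemoryChatMemory();
```

If token usage becomes a concern, the summary-buffer approach (LangChain’s ConversationSummaryBufferMemory) provides the right balance: it summarizes older exchanges while keeping recent interactions word for word.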

Integrating with external APIs

Powerful chatbots connect to external systems and data sources. Ollama’s tool support and Spring AI’s function calling features let chatbots access live information and perform dynamic tasks.

First, register Java functions as Spring beans:

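```java
import java.util.function.Function;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Description;

// Input type the model fills in; its fields define the arguments the model sees
public record WeatherRequest(String location) {}

// Inside a @Configuration class. WeatherService is your own (hypothetical) weather lookup.
// Enable it per request, e.g. chatClient.prompt().user(question).functions("weatherFunction").call()
// in the 1.0 milestones (toolNames(...) in Spring AI 1.0 GA).
@Bean
@Description("Get the current weather for a location") // tells the model when this tool applies
public Function<WeatherRequest, String> weatherFunction(WeatherService weatherService) {
    return request -> weatherService.getWeather(request.location());
}
```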

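When users ask weather-related questions, the model decides when to call this function and includes the returned data in its response. This requires an Ollama version with tool support (0.3.0 or later), a tool-capable model such as llama3.1 (the original llama3 does not support tools), and a Spring AI release newer than 1.0.0-M1. Solo Brands has used this kind of integration to boost resolution rates from 40% to 75%.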
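Behind the scenes, when the model decides to use a tool, Ollama’s chat response contains a tool call with a function name and arguments, and Spring AI routes it to the matching bean. The plain-Java sketch below shows what that routing amounts to: a registry from tool names to functions whose result is sent back to the model as a message with the tool role. ToolRegistry, the tool name, and the stubbed weather answer are all hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Minimal tool registry: maps tool names (as the model would emit them) to Java functions. */
final class ToolRegistry {
    private final Map<String, Function<Map<String, String>, String>> tools = new HashMap<>();

    void register(String name, Function<Map<String, String>, String> tool) {
        tools.put(name, tool);
    }

    /** Executes the requested tool; the returned text goes back to the model as a "tool" message. */
    String dispatch(String name, Map<String, String> arguments) {
        Function<Map<String, String>, String> tool = tools.get(name);
        if (tool == null) {
            return "Unknown tool: " + name; // report to the model instead of failing the whole chat
        }
        return tool.apply(arguments);
    }
}
```

Returning an error string for unknown tools, rather than throwing, lets the model recover by answering without the tool or asking the user for clarification.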

Adding personality to your chatbot

Great user experiences come from chatbots with distinct personalities. Custom personas make interactions engaging and fun because they make the chatbot feel more human.

The system prompt shapes your chatbot’s tone and behavior. In an Ollama Modelfile it is set with the SYSTEM instruction, for example:

```
FROM llama3
SYSTEM """You are Chiti, a friendly AI assistant who speaks concisely and occasionally uses humor. You’re knowledgeable about technology but explain concepts in simple terms."""
```

Running ollama create chiti -f Modelfile registers the result as a new model, so you can extend base models with custom personalities without retraining. This method works well for adapting responses to specific business needs or audience preferences.

These advanced features help you create exceptional conversational experiences that feel natural, helpful, and uniquely human.

Conclusion

Ollama and Spring AI open up new possibilities to build smart chatbots in the world of conversational AI. This piece shows how these tools make complex LLM implementations simpler. They enable advanced features like context awareness and multi-turn conversations.

Ollama’s local model hosting paired with Spring AI’s reliable framework creates limitless opportunities for chatbot development. Developers can now craft intelligent interactions instead of dealing with complex setups. On top of that, features like conversational memory and external API integration help create chatbots that feel more human.

Success stories show how well-built chatbots affect businesses by optimizing customer service and boosting user satisfaction. The technology might seem daunting at first, but the development process becomes achievable when broken down into smaller steps.

Building effective chatbots needs constant refinement and testing. You should start with simple implementations and collect user feedback. Advanced features can be added as your expertise grows. The world of conversational AI looks bright, and these tools make it accessible to every developer.

Stay ahead with AI innovations and Java best practices—Follow Digitechgenai.com

FAQs

Q1. How can I get started with building an AI chatbot using Ollama and Spring AI?

To begin, set up your development environment by installing the necessary software, including Java, Python, and an IDE. Then, install Ollama on your preferred platform and configure Spring Boot for AI development. Start by running a basic LLM model with Ollama and gradually build your chatbot’s functionality using Spring AI’s framework.

Q2. What are the key benefits of using Ollama and Spring AI for chatbot development?

Ollama allows you to run sophisticated LLMs locally, providing flexibility and control over your models. Spring AI simplifies integration with various LLM platforms and offers robust support for building scalable applications. Together, they create a powerful foundation for developing intelligent, context-aware chatbots with features like conversational memory and external API integration.

Q3. How can I make my chatbot more intelligent and context-aware?

Implement conversational memory to retain information from previous exchanges, allowing for more natural multi-turn conversations. Use intent classification to understand and categorize user messages based on their underlying purpose. Additionally, integrate external APIs to access real-time information and perform dynamic tasks, enhancing your chatbot’s capabilities and relevance.

Q4. What advanced features can I add to enhance my chatbot’s functionality?

You can implement multi-turn conversations to maintain context throughout dialogs, integrate with external APIs for real-time data access, and add a distinct personality to make interactions more engaging. These features help create a more natural and versatile chatbot experience, improving user satisfaction and engagement.

Q5. How important is testing and refinement in chatbot development?

Testing and continuous refinement are crucial for creating effective chatbots. Thoroughly test your chatbot to ensure it responds correctly to various inputs and scenarios. Collect user feedback, monitor performance metrics, and make iterative improvements. Start with basic implementations and gradually add advanced features as you gather more insights and understanding of user needs.